My Elevator Pitch

Computers are devices built to perform computations on the data given to them, and the great majority of them use a base-two number system called binary. While human beings are capable of advanced calculation, we are error-prone and unable to upgrade our organic hardware with newer designs (yet). Computers, by contrast, are designed to be “programmed”, or instructed to perform different calculations without being rewired or physically changed. This design has allowed computers to take on, and transform, a vast number of tasks, workloads, and services in human civilization.

A Quick History of Computers

Update 04/15/2019: My 5-year-old found The Story of Coding (affiliate link, which supports my knowledge quest) at his school book fair. I was surprised to find it was not just a story of coding but a history of computers, the Internet, the web, and code. It’s full of some of the same factoids I have laid out below, but with better pictures, better structure, and more concise prose. It’s written for children, but I immediately took it to my wife (and later my parents) and asked her to read it so she’d know why I’ve been so excited and passionate about technology all these years. This book is great for kids, but it’s also great for anyone who is even slightly interested in technology and wants a 5-minute overview. Now back to my original post:

(I got most of this shamelessly from Wikipedia; see sources below.)

Early history is peppered with interesting archaeological artifacts suggesting that humans used tools for mathematical calculation, from the Ishango Bone (20,000 B.C.E.) to the abacus (2,500 B.C.E.) to the Antikythera Mechanism (125 B.C.E.). Beyond hardware, humanity reaches logical milestones in antiquity, such as the first use of binary in India, negative numbers in China, and the first computation resembling a “program” in the Roman Empire.

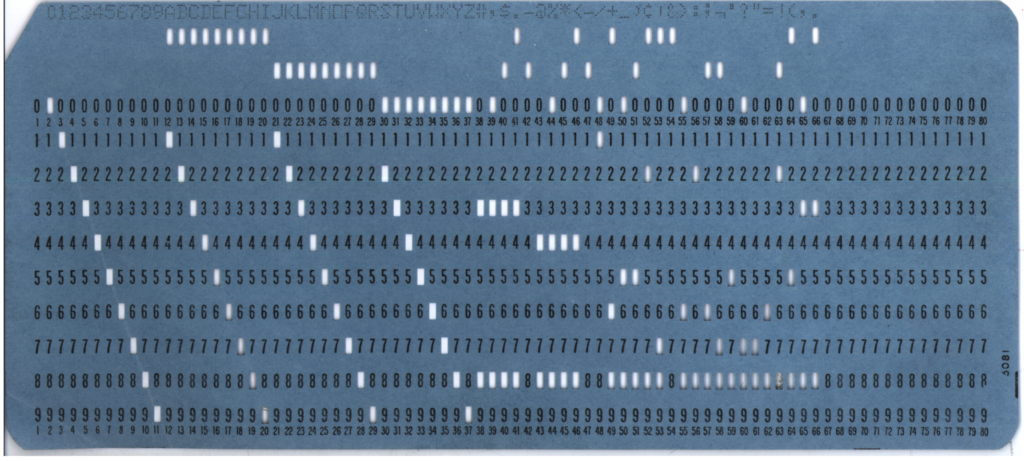

Achievements in mathematics and engineering continue until some major breakthroughs in the 1800s. In 1822 English mathematician Charles Babbage proposes his Difference Engine, and in 1837 he describes the more general “Analytical Engine”. His collaborator Ada Lovelace, also an English mathematician, develops a method for the machine to move beyond simple calculation: she proposes a way for it to calculate Bernoulli numbers, in what is arguably the first “algorithm” developed for a computer. In 1847 English mathematician George Boole develops Boolean algebra, the logic system that forms the basis of today’s binary computers. In 1890 former U.S. Census employee Herman Hollerith’s machines tabulate the U.S. Census using punch cards, a way to store data that machines can read; in 1896 he founds the Tabulating Machine Company, which later merges into the company that becomes IBM.

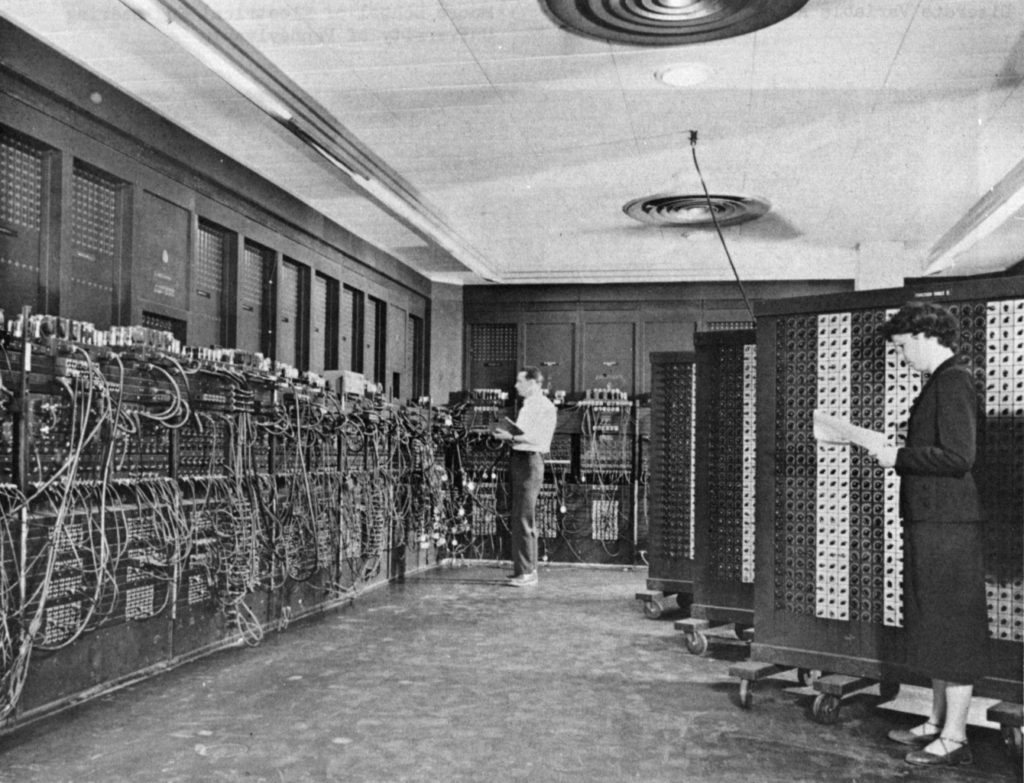

In the mid-1940s, American engineers John W. Mauchly and J. Presper Eckert build ENIAC, a fully electronic programmable computer that uses vacuum tubes for computation. Over the next 10 years, many computers are built in various developed countries, including designs using transistors, a marked improvement over vacuum tubes. Genius American mathematician Grace Hopper conceives a new idea: converting English words into “machine code” that computers can execute, using a program called a “compiler”. Programming “languages” follow, including FORTRAN and COBOL. In 1958 engineer Jack Kilby invents the integrated circuit at Texas Instruments, and physicist Robert Noyce (who went on to co-found Intel) independently develops his own version at Fairchild Semiconductor the following year.

As computers move to integrated circuits, and thus to smaller sizes, during the 1960s and 1970s, many achievements follow: the BASIC language is developed, ARPA builds the packet-switched ARPANET, the Unix operating system and the C programming language are created, Intel develops dynamic RAM chips, and Ethernet (Xerox) and the TCP/IP protocol suite (ARPA) are created. In 1975, Bill Gates and Paul Allen create a microcomputer implementation of BASIC and go on to found Microsoft. In 1976 Apple is founded by Steve Jobs and Steve Wozniak. In the same year, Whitfield Diffie and Martin Hellman publish their research into asymmetric (public-key) cryptography in a paper entitled “New Directions in Cryptography”. In 1977 Ron Rivest, Adi Shamir, and Leonard Adleman create the RSA cryptosystem. In 1978 Intel creates the first x86 microprocessor, the 8086; as of this writing in 2019, x86 is still the dominant architecture for desktop and server CPUs.

Photo by Ruben de Rijcke [CC BY-SA 3.0], via Wikimedia Commons

In 1981, IBM announces the IBM Personal Computer and partners with Microsoft to create its operating system, MS-DOS 1.0. In the same year, RFCs 791 and 793 establish the Internet Protocol and the Transmission Control Protocol, respectively, as open standards. Richard Stallman launches the GNU Project in 1983 as a free and open alternative to proprietary UNIX. The same year, RFC 881 lays out the plan for the Domain Name System, which RFCs 882 and 883 specify. Microsoft Windows launches to little fanfare in 1985. To end the decade with a bang, in 1989 Tim Berners-Lee at the European Organization for Nuclear Research (known as CERN) proposes the system that becomes the “World Wide Web”; by the end of 1990 he and his team have HTML, HTTP, a test web server, and a browser client working.

In 1991 Linus Torvalds announces his new kernel on Usenet, and in 1992 he changes its license to the GNU General Public License (GPL). Around the same time it is combined with software from Richard Stallman’s GNU project, which was still waiting for its own kernel to be completed. Over the next two decades, the operating system known as “Linux” comes to dominate many computing markets and paves the way for the proliferation of free and open source software. In 1993 the Moving Picture Experts Group (MPEG) designs an audio format for its MPEG-1 and MPEG-2 standards that becomes known as MP3. 1995 is a big year: Sun Microsystems releases the Java programming language, Microsoft releases Windows 95, and Netscape announces JavaScript. In 1997 IBM’s Deep Blue beats world chess champion Garry Kasparov in a chess match.

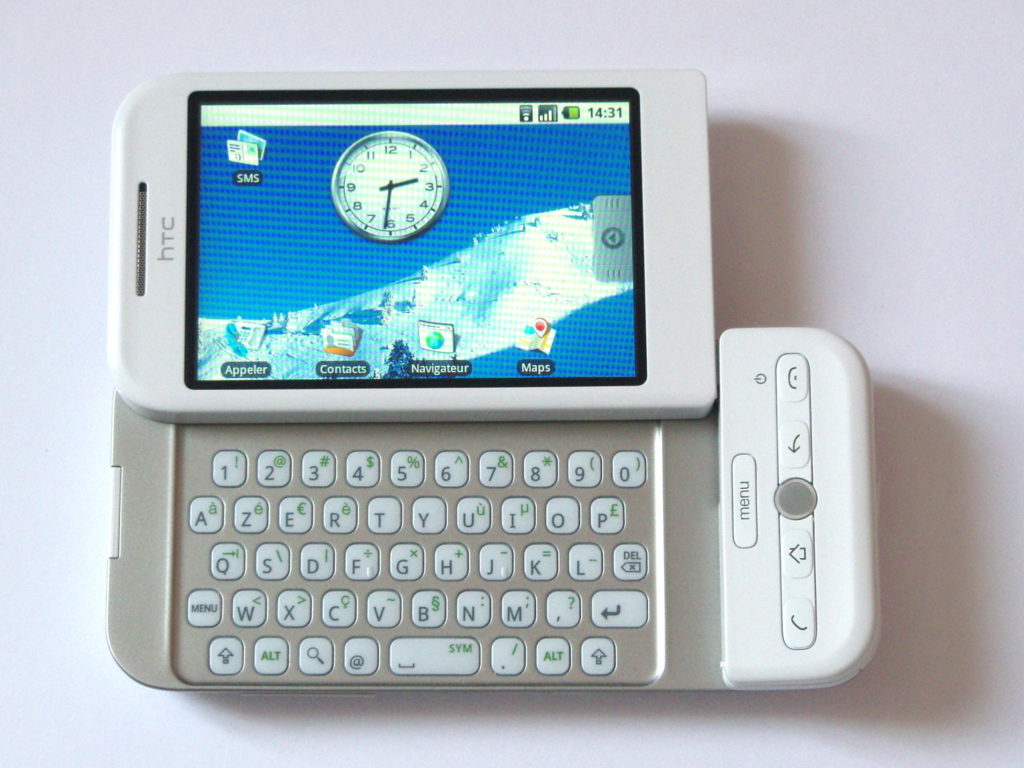

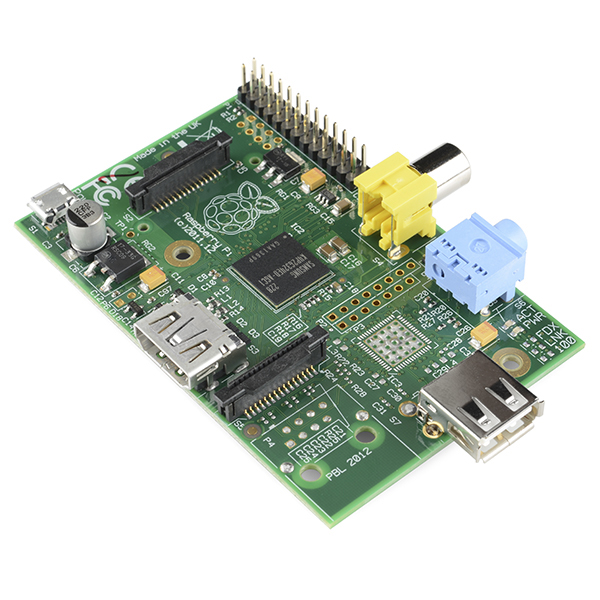

In the year 2000, both Intel and AMD release CPUs clocked at 1 GHz. RSA Security releases the RSA algorithm into the public domain shortly before its patent expires, making strong asymmetric encryption and authentication freely available to the general public. In 2001 Apple releases Mac OS X and Microsoft releases Windows XP. 2007 sees Apple’s first iPhone. In 2008 the first version of Android, an operating system Google acquired in 2005, is released. In 2009 Windows 7 is released, along with the source code for Bitcoin. In 2012 the Raspberry Pi, a computer about the size of a credit card, is released as a $35 educational tool for kids learning computer programming.

What Computers Do

This is actually kind of an interesting question, with seemingly limitless answers thanks to a computer’s programmable design. My day is made up of many activities that are in some way assisted by computers. In my personal life I communicate with family and friends over SMS, keep track of events with my phone’s calendar, and search for tech articles on Google. My 5-year-old son likes Minecraft and YouTube. At work I use Microsoft Office 365 for email and Cisco Webex for meetings. What I’ve discovered is that those are just the uses, not what computers actually do. The answer is just one thing: computers follow instructions. In computer land, instructions are called code. This is not a coding blog; I just include some code here and there to illustrate a point. The “Hello World” program that kicks off nearly every coding course really blows my mind when I think about it:

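```c
#include <stdio.h>  /* declares printf */

int main() {
    printf("Hello World!");  /* write the text between the quotes to the screen */
    return 0;                /* 0 tells the operating system the program succeeded */
}
```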

I can type a couple of quick commands (with “helloworld.c” being a text file containing the above code):

```shell
make helloworld
./helloworld
```

I get this output on the screen (the same commands work on a Mac; on Windows, press F5 in Microsoft Visual Studio):

```
Hello World!
```

What is mind-blowing for me about the hello world program is the `printf("Hello World!");` line. I can literally change what’s between the quotes to whatever I want, and the computer will spit it out for me. I could put another phrase in there, like “Hello, I’m Johnny Cash” or “Computers are cool”, or something elaborate like the complete works of Shakespeare. The computer will work tirelessly and thanklessly for me until it completes its task. That’s some power.

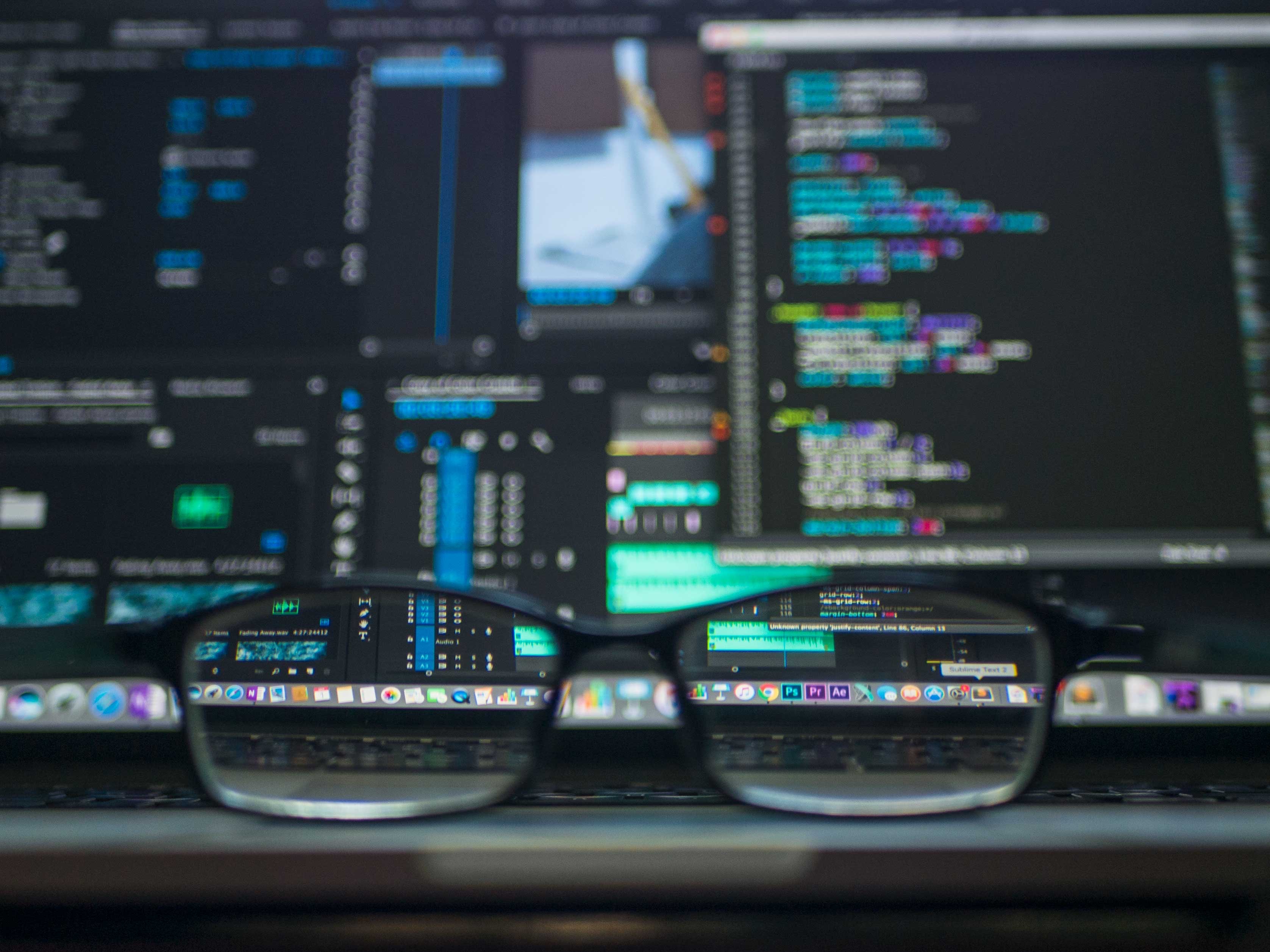

The Current State of the Computer

You may be wondering: what happened in the 2010s? Some of the coolest advances in technology have happened since 2010, so surely there would be something about computing worth putting in the history section. From my point of view here on February 26th, 2019, advances in hardware and computational power seem to have slowed compared to earlier decades. Perhaps this slower growth is because nearly every American already owns a computer of some sort (desktop, tablet, phone, etc.), according to Pew Research in 2018.

I’d wager that the major innovation is no longer going into individual computers but into services (think Office 365, Netflix, or Facebook). A 2018 Gartner, Inc. survey of cloud computing shows cloud services continuing to grow by big percentages, while another 2018 Gartner, Inc. survey shows desktop and laptop PC sales continuing to slump. The growth of these cloud services is fueled by data from the cloud’s cousin, the Internet of Things. Gartner’s 2016 IoT survey tells us that the number of connected things was 6 billion in 2016 and was expected to reach 8.4 billion by 2018. I’m excited to see what innovations the coming years will bring.

Sources

https://homepage.cs.uri.edu/faculty/wolfe/book/Readings/Reading03.htm

https://en.wikipedia.org/wiki/Timeline_of_computing_hardware_before_1950

https://en.wikipedia.org/wiki/Timeline_of_computing_1950%E2%80%931979

https://en.wikipedia.org/wiki/Timeline_of_computing_1980%E2%80%931989

https://en.wikipedia.org/wiki/Timeline_of_computing_1990%E2%80%931999

https://en.wikipedia.org/wiki/Timeline_of_computing_2000%E2%80%932009

https://en.wikipedia.org/wiki/Timeline_of_computing_2010%E2%80%932019